- The Problem of Inconsistency is Solved: New AI techniques in tools like Midjourney and Stable Diffusion allow you to use a single reference image to generate countless new images of the same character, maintaining their facial features, style, and essence.

- You Are the Director: This technology transforms you from a mere prompter into a virtual director. You can control not just the character but also the camera angles, lighting, wardrobe, and setting to build a cohesive visual world, much like a real-world photoshoot or film set.

- Beyond Stills to Story: This “AI Photoshoot” workflow is a revolutionary tool for pre-production. It enables filmmakers and creators to develop lookbooks, craft detailed storyboards, and even generate keyframes for AI-driven video, dramatically streamlining the creative process from concept to execution.

For anyone who has spent hours wrestling with generative AI, the frustration is familiar. You craft the perfect character—the grizzled detective with a single cybernetic eye, the ethereal sorceress with silver-threaded hair—only to have the AI render a completely different person in the very next frame. The tell-tale signs of inconsistency have plagued AI art from the beginning, turning potential storyboards into a gallery of loosely related strangers. This “identity crisis” has been the primary barrier between generative AI as a novelty and its adoption as a serious tool for narrative storytelling. But that barrier is now crumbling. We are entering the era of the AI Photoshoot, a revolutionary workflow where a single, definitive image can become the “digital DNA” for an entire visual narrative, ensuring your hero looks like your hero in every single shot.

The Core Concept: Unlocking Character Consistency

The foundational shift enabling the AI photoshoot is the ability to lock in a character’s identity using a reference image. Instead of relying solely on the abstract interpretation of a text prompt, these new methods allow the AI to “see” and “understand” the specific facial features, hair, and overall vibe of a character from a source image. This process effectively anchors the AI’s creativity, giving it a persistent subject to place within an infinite variety of new scenes and scenarios. This is the end of generating a character’s long-lost twin; it’s the beginning of true digital casting.

This technology is no longer theoretical; it’s accessible right now through various platforms. Midjourney’s Character Reference (--cref) feature allows users to append the URL of a source image to their prompt, instructing the model to maintain that character’s likeness. In the more customizable world of Stable Diffusion, techniques like IP-Adapter and the training of specialized LoRAs (Low-Rank Adaptations) offer even finer control. For filmmakers, this is a pre-production game-changer. Imagine being able to generate a complete character lookbook for your protagonist, testing dozens of wardrobe and lighting setups in minutes, all before a single dollar is spent on physical production. Companies such as Netflix and Epic Games, the maker of Unreal Engine, are already exploring similar workflows to accelerate concept art and pre-visualization.
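If you generate many shots against the same reference, it can help to build prompts programmatically instead of retyping the URL each time. The Python sketch below is illustrative: the function name and example URL are hypothetical, and it assumes the --cref and --cw (character weight, 0 to 100) parameters as documented for Midjourney V6. Newer model versions may handle references differently, so check the current documentation before relying on it.

```python
# Hypothetical helper for building Midjourney prompts that reuse one character
# reference. Only --cref and --cw are Midjourney parameters; the function name
# and example URL are illustrative.


def build_cref_prompt(scene: str, cref_url: str, cw: int = 100) -> str:
    """Return `scene` with a character reference and character weight appended."""
    # --cw runs from 0 (mainly the face) to 100 (face, hair and clothing).
    if not 0 <= cw <= 100:
        raise ValueError("--cw must be between 0 and 100")
    # --cref expects a publicly reachable image URL.
    if not cref_url.startswith(("http://", "https://")):
        raise ValueError("--cref expects an image URL")
    return f"{scene.strip()} --cref {cref_url} --cw {cw}"


if __name__ == "__main__":
    ref = "https://example.com/detective.png"  # placeholder reference image
    print(build_cref_prompt("grizzled detective on a rainy neon street, medium shot", ref))
    print(build_cref_prompt("wearing a tuxedo at a gala, wide shot", ref, cw=0))
```

Keeping --cw at its default of 100 asks Midjourney to carry over face, hair, and clothing; dropping it to 0 focuses on the face, which is handy when the shot calls for a wardrobe change.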

Mastering this technique is crucial because it elevates AI from a random image generator to a predictable production tool. It introduces reliability into a previously chaotic process, allowing creators to build a visual world with confidence. The practical tip here is to start with a superior source image. Your reference shot should be well-lit, high-resolution, and feature a clear, unobstructed view of the character’s face from a relatively neutral angle. The quality of this “digital seed” directly dictates the quality and consistency of every subsequent image in your AI photoshoot, so investing time in crafting the perfect reference is the most critical first step.
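Resolution is the one part of this checklist a script can verify; lighting, angle, and a clear view of the face still need your eye. As a minimal sketch using only Python’s standard library, the code below reads a PNG’s width and height from its IHDR header and checks the shorter side against a threshold. The 1024-pixel minimum and the file name in the usage comment are assumptions for illustration, not requirements of Midjourney or Stable Diffusion.

```python
import struct

# Minimal sketch: read a PNG's dimensions from its header (standard library only)
# and check them against an assumed minimum. The 1024 px threshold is illustrative.

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(header: bytes) -> tuple[int, int]:
    """Return (width, height) from the IHDR chunk that immediately follows the signature."""
    # Bytes 0-7: signature; 8-11: chunk length; 12-15: chunk type; 16-23: width, height.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def resolution_ok(header: bytes, min_side: int = 1024) -> bool:
    """True if the shorter side of the image is at least `min_side` pixels."""
    width, height = png_size(header)
    return min(width, height) >= min_side


# Usage (hypothetical file name):
# with open("hero_reference.png", "rb") as f:
#     print(resolution_ok(f.read(24)))
```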

Directing the Virtual Set: Mastering Scene and Perspective

Once you have established a consistent character, the next step is to place them in a dynamic world, and this is where the “photoshoot” concept truly comes to life. The reference image controls the “who,” but your text prompt regains its power to control the “what,” “where,” and “how.” You can now direct the AI like a cinematographer, specifying camera angles, shot sizes, and composition. Prompts can be precisely tuned to generate a “dramatic low-angle shot,” an “intimate over-the-shoulder view,” or a “sweeping wide shot establishing the scene,” all while your character remains recognizably the same. This level of control transforms the generation process from a lottery into a deliberate act of virtual filmmaking.
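One way to keep the “who” fixed while varying the “how” is to generate coverage: the same subject and action, rendered with several camera directions. In this hypothetical sketch, [character] is a placeholder you would replace with your reference setup (a --cref URL in Midjourney, or an IP-Adapter image in Stable Diffusion), and the shot list simply reuses the three directions mentioned above.

```python
# Illustrative coverage generator: subject and action stay fixed while the
# camera direction changes. The [character] placeholder and this shot list are
# assumptions for the sketch.

CHARACTER = "[character]"  # swap in your --cref or IP-Adapter setup

CAMERA_DIRECTIONS = [
    "sweeping wide shot establishing the scene",
    "dramatic low-angle shot",
    "intimate over-the-shoulder view",
]


def coverage(action: str, directions: list[str]) -> list[str]:
    """Return one prompt per camera direction, all sharing subject and action."""
    return [f"{CHARACTER}, {direction}, {action}" for direction in directions]


if __name__ == "__main__":
    for prompt in coverage("walking through a rain-soaked alley at night", CAMERA_DIRECTIONS):
        print(prompt)
```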

The real-world parallels are direct and powerful. Think of a fashion photographer on set, directing a model through a series of poses and environments. This is precisely what you are doing with your prompts. Advanced users can leverage tools within the Stable Diffusion ecosystem, such as ControlNet, to gain even more granular control. By providing a secondary input image, such as a depth map, a Canny edge map, or even a simple stick-figure pose skeleton, you can dictate the exact posture and position of your character in the frame. You could, for instance, use a reference pose from a classic Alfred Hitchcock film to position your AI character, blending iconic cinematography with your unique subject.

This matters because it empowers solo creators and small teams with the capabilities of a full-scale production crew for the pre-production phase. You can effectively storyboard an entire sequence, experimenting with shot flow and visual rhythm without needing to hire actors or scout locations. A practical tip is to create a “pose library” using simple 3D modeling software or even by taking photos of yourself in various positions. You can feed these poses into ControlNet to ensure your character’s body language is as consistent and intentional as their facial identity, adding another layer of directorial control to your AI-driven narrative.
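A pose library is easiest to manage as a folder of images named after the pose they show. The sketch below indexes such a folder and attaches a pose image to each shot in your sequence. The folder layout, file naming, and helper names are assumptions, and the actual ControlNet call (in AUTOMATIC1111, ComfyUI, or the diffusers library) is left out because it depends on your setup.

```python
from pathlib import Path

# Hypothetical pose-library helpers: index a folder of pose images (stick-figure
# skeletons or reference photos) by file name, then pair each shot's prompt with
# the pose it should be conditioned on. Names and layout are illustrative.

POSE_EXTENSIONS = {".png", ".jpg", ".jpeg"}


def load_pose_library(folder: Path) -> dict[str, Path]:
    """Map each pose name (file stem, lowercased) to its image path."""
    return {
        p.stem.lower(): p
        for p in sorted(folder.iterdir())
        if p.is_file() and p.suffix.lower() in POSE_EXTENSIONS
    }


def pair_shots_with_poses(
    shots: list[tuple[str, str]], library: dict[str, Path]
) -> list[tuple[str, Path]]:
    """Attach a pose image to every (prompt, pose_name) pair; fail loudly on a missing pose."""
    paired = []
    for prompt, pose_name in shots:
        key = pose_name.lower()
        if key not in library:
            raise KeyError(f"pose '{pose_name}' not found in library")
        paired.append((prompt, library[key]))
    return paired
```

Failing on a missing pose, rather than silently generating without one, keeps the body language in your sequence as deliberate as the face.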

The Art of the Prompt: Directing Your Digital Talent

The reference image and the text prompt are not two separate inputs; they are partners in a creative dance. While the image provides the subject, the prompt provides the context, emotion, and action. Learning to write prompts that complement your visual reference is the key to unlocking truly compelling results. Your prompt is no longer burdened with describing every minute detail of your character’s face; it is freed up to focus entirely on the story you want to tell in that specific frame. This synergy is where true AI artistry begins.

Consider this example workflow. Your base reference is a stoic-looking space marine, and [character] stands in for that anchored character. Your first prompt might be: “[character], medium shot, standing on the bridge of a starship, focused expression, cinematic blue lighting.” For the next shot, you can keep the core elements but alter the narrative: “[character], extreme close up, a single tear on their cheek, reflecting the red alert light of the console, shallow depth of field.” The AI, anchored by --cref or IP-Adapter, will render the same marine but interpret the new emotional and environmental cues from your text. This granular, shot-by-shot direction is a skill we explore deeply in our Midjourney Mastery course.

Why is this so important? Because it re-centers the creative process on the human artist. The technology handles the technical rendering of a consistent face, allowing you to focus on the higher-level creative decisions of storytelling, mood, and emotion. The practical tip here is to adopt a filmmaker’s mindset by creating a “shot list” before you begin generating. In a simple text document, write out a numbered list of the shots you want to create, each with a detailed prompt. This methodical approach forces you to think narratively and ensures your AI photoshoot tells a coherent story, rather than just producing a collection of disconnected but consistent images.
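If you keep the shot list as a numbered plain-text file, a few lines of Python can turn it into ready-to-paste prompts. This is a minimal sketch that assumes one shot per line in the form “1. prompt”, ignores unnumbered lines such as scene headings, and appends a placeholder --cref URL; the sample list reuses the two space-marine prompts from the example workflow.

```python
import re

# Minimal sketch: parse a plain-text shot list ("1. prompt") into numbered
# prompts and attach the same character reference to each. The file format and
# the placeholder URL are assumptions for illustration.

SHOT_LINE = re.compile(r"^\s*(\d+)\.\s+(.+?)\s*$")


def parse_shot_list(text: str) -> list[tuple[int, str]]:
    """Return (shot_number, prompt) pairs; unnumbered lines are ignored."""
    shots = []
    for line in text.splitlines():
        match = SHOT_LINE.match(line)
        if match:
            shots.append((int(match.group(1)), match.group(2)))
    # Catch gaps or duplicates, which usually mean a shot was dropped by accident.
    numbers = [n for n, _ in shots]
    if numbers != list(range(1, len(shots) + 1)):
        raise ValueError(f"shots should be numbered 1..{len(shots)}, got {numbers}")
    return shots


SHOT_LIST = """
Scene 4: the bridge
1. [character], medium shot, standing on the bridge of a starship, focused expression, cinematic blue lighting
2. [character], extreme close up, a single tear on their cheek, reflecting the red alert light of the console, shallow depth of field
"""

if __name__ == "__main__":
    ref = "https://example.com/space-marine.png"  # placeholder reference URL
    for number, prompt in parse_shot_list(SHOT_LIST):
        print(f"Shot {number}: {prompt} --cref {ref}")
```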

From Still Images to Motion: The Next Frontier

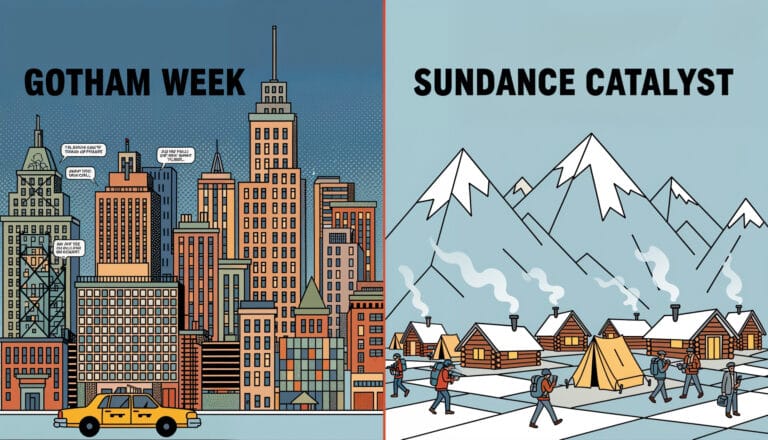

The AI photoshoot workflow is not an end in itself; it is the essential building block for the next revolution: consistent AI-generated video. The primary challenge for AI video tools like Runway, Pika, and OpenAI’s Sora has been maintaining character identity from one frame to the next. By using this photoshoot method to generate a series of consistent keyframes, creators can provide the AI video model with a much stronger foundation to build upon, resulting in more coherent and believable animated sequences. This bridges the gap between static concept art and living, breathing motion.

We are already seeing the first wave of this creative evolution at AI film festivals and online showcases. Independent creators are storyboarding entire short films using a consistent character, generating a dozen pivotal moments as still images. They then feed these images into an image-to-video platform to create short, animated clips, which are then edited together into a final film. This is the new frontier of Filmmaking AI, a topic we are passionate about at Design Hero. It’s a workflow that dramatically lowers the barrier to entry for animation and visual effects, empowering storytellers in unprecedented ways.

This evolution is significant because it democratizes the creation of sophisticated visual narratives. What once required a team of animators or a massive VFX budget can now be prototyped, or in some cases fully executed, by a single artist. A practical tip to get started is to think small. Don’t try to generate a feature film on your first attempt. Create a simple, three-shot “micro-scene”: an establishing shot, a medium shot of your character reacting, and a close-up on a key detail. Generate these three stills with your consistent character, then use a tool like Runway to add 4 seconds of subtle motion to each. This simple exercise will teach you the fundamentals of the entire still-to-motion pipeline.
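Written down as data, the micro-scene exercise might look like this. The prompts and the class and field names are hypothetical; the three-shot structure and roughly 4 seconds of motion per clip come from the exercise itself.

```python
from dataclasses import dataclass

# Sketch of the three-shot "micro-scene": three stills of the same character,
# each animated for about 4 seconds in an image-to-video tool. Class, field
# names, and prompts are hypothetical.


@dataclass
class Shot:
    name: str
    prompt: str
    motion_seconds: float = 4.0


MICRO_SCENE = [
    Shot("establishing", "[character], wide establishing shot, abandoned train station at dawn"),
    Shot("reaction", "[character], medium shot, turning toward a distant sound, wary expression"),
    Shot("detail", "[character], close-up on a hand gripping a brass pocket watch"),
]


def total_runtime(shots: list[Shot]) -> float:
    """Length of the edit if every animated clip is used in full."""
    return sum(shot.motion_seconds for shot in shots)


if __name__ == "__main__":
    for i, shot in enumerate(MICRO_SCENE, start=1):
        print(f"{i}. {shot.name}: {shot.prompt} ({shot.motion_seconds:g}s)")
    print(f"Total runtime: {total_runtime(MICRO_SCENE):g}s")
```

Three 4-second clips give a 12-second scene, short enough to iterate on quickly while still exercising every step from still to motion to edit.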

Internal Links for Further Learning

- Manage your complex AI projects and creative assets with our new AI Render Pro universal prompt creator.

- Dive deeper into advanced prompting techniques in our comprehensive Midjourney Mastery course.

- Explore the future of cinematic storytelling on our Filmmaking AI content hub.

Conclusion

The era of the six-fingered, face-swapping AI character is over. We have moved from unpredictable image generation to deliberate, controlled virtual cinematography. The AI photoshoot workflow is more than just a clever trick; it’s a fundamental paradigm shift that places the power of narrative consistency directly into the hands of the creator. It allows you to build worlds, develop characters, and craft stories with a level of visual coherence that was previously unimaginable. The tools are here, the workflow is clear, and the blank canvas is waiting. Now it’s your turn to direct. Start building your visual narratives today, and consider using a tool like AI Render Pro to keep your projects organized and your vision on track.

FAQ

What are the best AI tools for character consistency?

The most popular and effective tools right now are Midjourney’s --cref (Character Reference) feature, for its ease of use; Google’s Gemini 2.5 Flash Image model (nicknamed “Nano Banana”); and Stable Diffusion workflows using extensions like IP-Adapter or custom-trained LoRAs, for maximum control and customization.

Can I use a photo of a real person as a reference?

While technically possible, it is fraught with ethical and legal complexities. Using a person’s likeness without their explicit consent can violate privacy and publicity rights. The best practice is to first generate a unique, AI-native character and then use that image as your consistent reference to build your narrative world.

How will this impact traditional photography and filmmaking?

This technology is best viewed as a powerful augmentation tool, not a replacement. It will revolutionize pre-production, concept art, and storyboarding by making the process faster and more visually rich. However, it will not replace the nuance of a real actor’s performance, the vision of a skilled cinematographer on set, or the collaborative energy of a physical film production.

Discover more from Olivier Hero Dressen Blog: Filmmaking & Creative Tech
